The $180,000 Button: How Brittle Tests Cost Your Company More Than You Think

The $180,000 Button: How Brittle Tests Cost Your Company More Than You Think

Monday Morning, 9:47 AM

Sarah, a senior developer, pushes her code. It’s a small change—she renamed a CSS class from .btn-primary to .button-primary for consistency across the codebase.

9:52 AM: The CI pipeline fails. 47 tests are red.

10:15 AM: Three developers stop what they’re doing to investigate.

11:30 AM: They discover there’s no actual bug. The tests just couldn’t find .btn-primary anymore.

12:45 PM: Pull request is finally merged after updating test selectors.

Cost of this “small” change: 3 developers × 2.5 hours × $60/hour = $450

Now multiply that by the dozens of times this happens every month.

Welcome to the hidden tax of brittle tests.

The $180K Problem Nobody’s Tracking

Let me show you some math that might make your finance team uncomfortable:

Scenario: Mid-sized development team (15 devs, 3 QA)

- Average hourly cost: $75 (loaded)

- Test suite failures per week: 12

- Average investigation time: 2.5 hours

- False positive rate: 60%

Weekly cost = 12 failures × 60% false × 2.5 hours × $75

= $1,350/week

= $70,200/year per team

Multi-team organization (3 teams):

= $210,600/year in wasted effortBut wait—there’s more:

- Opportunity cost: Features not built while debugging tests

- Deployment delays: Fixes waiting on test investigations

- Developer morale: Good people leave when tooling frustrates them

- Customer impact: Slower release cycles = missed opportunities

Real total: Closer to $500K-1M annually for a typical mid-sized engineering org.

And the worst part? Most companies don’t even know they’re paying this tax.

How We Got Here (And Why Everyone Blames QA)

Picture this conversation happening in every tech company:

Developer: “The tests are always breaking!”

QA: “We wrote the tests based on the code you gave us!”

Developer: “You’re using fragile selectors!”

QA: “You didn’t add data-testid attributes!”

Manager: nervous sweating “Can we just ship this?”

Here’s the uncomfortable truth: Everyone’s right. And everyone’s wrong.

The problem isn’t QA writing brittle tests. It’s not developers writing untestable code. It’s that these two groups are optimizing for completely different things in complete isolation:

| Developers think about | QA thinks about |

|---|---|

| Clean, maintainable code | Reliable test coverage |

| Semantic CSS classes | Stable selectors |

| Component reusability | Test maintainability |

| Fast iteration | Comprehensive checks |

They’re playing different games on the same field.

The result? Tests that look like this:

// QA writes this because they have no better option:

cy.get('#modal > div > div.content > button:nth-child(2)').click();

cy.wait(3000); // "Hope the API responds in 3 seconds!"

// Developer writes this and wonders why tests break:

<div className="modal-v2-new"> {/* Changed from modal-v2 */}

<Button variant="primary-new"> {/* Changed from primary */}Nobody’s villain here. The system is broken.

The “Just Fix It” Fallacy

When management discovers this problem, the typical response is:

“Great! Now we know. Let’s fix all the brittle tests this sprint.”

Narrator: They did not fix all the brittle tests that sprint.

Why not? Let’s look at a real test suite:

- 850 test files

- 3,200 individual tests

- Estimated 180 hours to manually review and fix

- $13,500 in direct labor cost

- 45 working days for one person

- High risk of introducing new bugs during refactoring

And here’s the kicker: While you’re fixing the old tests, developers are writing 50 new tests with the same brittle patterns because nobody taught them anything different.

You can’t manually refactor your way out of a systemic problem.

What If Tests Could Teach Themselves?

Imagine a different Monday morning:

9:47 AM: Sarah pushes code with renamed CSS classes.

9:48 AM: GitHub comments on her PR:

🤖 Test Quality Assistant

Hey Sarah! I noticed 12 tests in

checkout.spec.jsuse position-dependent selectors like.first()and.eq(2). These will break if button order changes.Quick fix:

// Instead of: cy.get('button').first().click(); // Use: cy.get('[data-cy="checkout-button"]').click();Want me to add data-cy attributes to those components?

9:52 AM: Sarah clicks “Yes, show me which components need attributes.”

9:55 AM: She adds three data-cy attributes, updates the tests.

10:00 AM: PR merges successfully.

Cost: ~15 minutes, $15 of developer time

Result: 12 tests are now more stable than before. Sarah learned something. The codebase improved.

ROI: 97% reduction in cost. Knowledge transferred. Problem prevented in the future.

The Three-Brain Approach to Test Quality

Here’s the system that makes this possible. Think of it as three different “brains” working together:

Brain 1: The Detective (ESLint)

What it does: Automatically scans every test file looking for the “Big 5” brittle patterns:

- ⏱️ Hard-coded waits -

cy.wait(2000)(the “hope and pray” approach) - 🔨 Force clicks -

click({ force: true })(the “make it work, consequences be damned”) - 🎯 Brittle selectors -

#modal > div > div.content > button(the “one DOM change away from disaster”) - 🥇 Position-dependent -

.first(),.eq(2)(the “assumes things never move”) - 🏷️ Non-semantic locators -

.btn-submit(the “implementation detail addiction”)

For business people: Think of this like a spell-checker, but for test code quality. It runs automatically on every code change.

For developers: Custom ESLint rules that catch patterns during development, not in CI failure hell.

// It catches things like:

cy.wait(3000); // ⚠️ Avoid cy.wait(ms) - use proper assertions

cy.get('button').first().click(); // ⚠️ Position-dependent selector - use content-based

page.locator('.submit-btn'); // ⚠️ Use getByRole('button', {name: 'Submit'})Brain 2: The Teacher (Three Different Reports)

The genius move: Don’t just detect problems—explain why they’re problems in the language each audience needs.

Report A: The Executive Dashboard (HTML)

Audience: Engineering managers, product managers, executives

Shows the big picture:

- 📊 Trend lines: “Brittleness decreased 34% this quarter”

- 🎯 Heat map: Which teams/files need attention

- 💰 Estimated cost savings from improvements

- 📈 Before/after metrics that make finance people happy

Why it matters: Management can actually see the problem and track improvement. They can justify time spent on quality.

Report B: The PR Comment (Markdown)

Audience: Developers during code review

Shows immediate, actionable feedback:

## 🧪 Test Quality Check

Found 3 brittle patterns in checkout.spec.js:

⏱️ **Hard-coded wait** (Line 47)

- Current: `cy.wait(2000)`

- Better: `cy.get('[data-cy="success"]').should('be.visible')`

- Why: Works on fast machines, fails in slow CI

🎯 **Brittle selector** (Line 89)

- Current: `cy.get('.modal > div > button:nth-child(2)')`

- Better: `cy.get('[data-cy="confirm-button"]')`

- Why: Breaks when CSS or structure changes

💡 Fixing these will save ~45 seconds per test runWhy it matters: Education at the point of change. Developers learn why and how to fix issues, not just that they exist.

Report C: The AI Whisperer (LLM-Optimized Markdown)

Audience: AI assistants (Claude, GPT-4, etc.)

This is where it gets really interesting.

Traditional approach (inefficient):

Developer: "Claude, help me fix brittle tests in my suite"

↓

AI reads 850 test files

↓

Cost: 50,000-80,000 tokens ($1.00-$2.00)

Time: 45-90 seconds

Result: Generic suggestionsOur approach (90% more efficient):

Developer: "Using test-quality-report.md, fix the top 5 files"

↓

AI reads pre-filtered, structured report

↓

Cost: 3,000-5,000 tokens ($0.06-$0.12)

Time: 3-5 seconds

Result: Specific, prioritized fixes with contextThe LLM report includes:

- Pre-identified issues with file:line numbers

- Explanations of why each pattern is brittle

- Fix recommendations with before/after code

- Priority ranking (HIGH/MEDIUM/LOW)

- Top 10 files needing attention

Why it matters: You can now use AI to refactor hundreds of tests in hours instead of weeks, at 95% lower cost.

Brain 3: The Preventer (Developer-Side Rules)

Here’s the secret weapon: Stop brittle tests at the source.

Add ESLint rules for your application code:

// This warns developers BEFORE they create problems:

<button onClick={handleClick}>Submit</button>

// ⚠️ Add data-testid or data-cy attribute for stable testsIntegrate with DangerJS to automate PR feedback:

// dangerfile.js - Automate code review chores

import { danger, warn, message } from 'danger';

// Warn if components changed without test updates

const componentFiles = danger.git.modified_files.filter(f =>

f.includes('/components/') && f.endsWith('.jsx')

);

const testFiles = danger.git.modified_files.filter(f =>

f.includes('.test.') || f.includes('.spec.')

);

if (componentFiles.length > 0 && testFiles.length === 0) {

warn('Component files changed without test updates. Consider updating tests!');

}

// Check for missing test IDs in new files

const newFiles = danger.git.created_files.filter(f =>

f.includes('/components/')

);

for (const file of newFiles) {

const diff = await danger.git.diffForFile(file);

if (!diff.diff.includes('data-testid') && !diff.diff.includes('data-cy')) {

warn(`New component ${file} is missing test identifiers (data-testid or data-cy)`);

}

}

// Celebrate good practices!

if (danger.git.diff.includes('data-testid') || danger.git.diff.includes('data-cy')) {

message('✨ Great job adding test identifiers! This makes our tests more stable.');

}Why it matters: Prevention > cure. Automate code review feedback so developers get instant guidance on writing testable code.

The Real-World Transformation: Before & After

Let me show you what this looks like in practice:

Before: The Doom Loop

Week 1:

- 236 brittleness warnings across codebase

- CI pipeline fails 12 times

- 18 hours spent investigating false positives

- Team morale: 😤 frustrated

- Manager asks: “Why are tests always broken?”

QA’s response: Defensive, exhausted

Developer’s response: “Not my problem”

Outcome: Nothing changes, blame continues

After: The Virtuous Cycle

Week 1: Discovery

Generate HTML dashboard → Show team the 236 warnings

Reaction: "Oh wow, we didn't realize it was this bad"

Action: Education session on the Big 5 patternsWeek 4: First Wins

Use LLM report with AI assistant

Fix top 3 files with most hard-coded waits (23 occurrences)

Time spent: 2 hours

Result: 190 warnings (-20%)

Team reaction: "Wait, that was actually easy?"Week 8: Momentum

Developers start using PR comments as learning tool

QA adds data-cy attributes proactively

Bot celebrates good practices automatically

Result: 130 warnings (-45%)

Team reaction: "Hey, we're getting good at this!"Week 12: Culture Shift

New developer joins team

First PR gets educational bot comment

They learn patterns immediately

Result: 70 warnings (-70%)

Team reaction: "This is just how we work now"Week 16: New Normal

Warnings stabilized at 50 (-78% from baseline)

CI failures reduced by 60%

Average test investigation time: 30 min → 8 min

Team morale: 🎉 proud of their test suiteThe Numbers That Make Finance Happy

Investment:

- 1 week to set up ESLint rules and reports: $6,000

- 2 hours/week maintaining system: $8,000/year

- Total Year 1 cost: $14,000

Return:

- Reduced false positive investigation: $42,000/year savings

- Faster CI/CD (less blocking): $28,000/year savings

- Developer time redirected to features: $35,000/year value

- Reduced turnover from improved morale: $50,000/year value

- Total Year 1 benefit: $155,000

ROI: 1,007% 🚀

The Stakeholder Pitch (Copy-Paste for Your Next Meeting)

“We’ve identified a systemic issue costing us approximately $180K annually in wasted engineering effort—brittle tests that fail for non-bug reasons.

I’m proposing a 16-week program using automated detection, AI-assisted refactoring, and preventive education. The approach has three components:

- Automated detection via custom ESLint rules (catches problems early)

- Educational reporting via three different formats (teaches the team)

- AI-accelerated fixes via structured reports (makes large-scale refactoring feasible)

Investment: $14K in Year 1

Expected return: $155K+ in Year 1

Payback period: 6-8 weeksWe’ll track weekly metrics and can pivot if targets aren’t met. The system is non-blocking so it won’t slow down development.”

The Developer Pitch (For Your Team Chat)

“Hey team—I found an approach to stop our test suite from being a constant source of frustration.

Instead of manually fixing hundreds of brittle tests, we set up automated detection + AI-assisted refactoring. It’s like having a senior QA engineer review every test automatically.

The cool part: It’s educational, not punitive. You get explanations of why patterns are problematic with specific fixes. And it works both ways—helps us write more testable code too.

I built a proof-of-concept this weekend. Want to see it in action?”

Start Small, Win Fast

You don’t need to boil the ocean. Here’s the 4-week MVP:

Week 1: Prove the Problem Exists (4 hours)

# Set up basic ESLint rules for 2 patterns:

# - Hard-coded waits

# - Force clicks

npm install --save-dev eslint

# Create custom plugin with 2 rules

# Run on test suiteDeliverable: Count of issues

Pitch: “We have 127 hard-coded waits. Each one is a potential flake.”

Week 2: Make It Visual (4 hours)

# Generate simple HTML report

# Show trend line (even if it's just one data point)Deliverable: HTML dashboard

Pitch: “Here’s what we’re dealing with. Let me show management.”

Week 3: First AI Win (2 hours)

# Generate LLM-optimized report

# Use it with Claude/GPT to fix ONE fileDeliverable: Before/after comparison of one test file

Pitch: “AI fixed 12 issues in 8 minutes. Imagine doing this at scale.”

Week 4: Automate PR Comments (6 hours)

# Set up GitHub Action

# Add PR comment generationDeliverable: Educational PR comments

Pitch: “Now everyone learns as they code. No manual review needed.”

Total time investment: 16 hours

What you’ve built: A working system that proves the concept

What you’ve learned: Whether this approach works for your team

If it works? Scale it. If it doesn’t? You’re out 16 hours and learned something valuable.

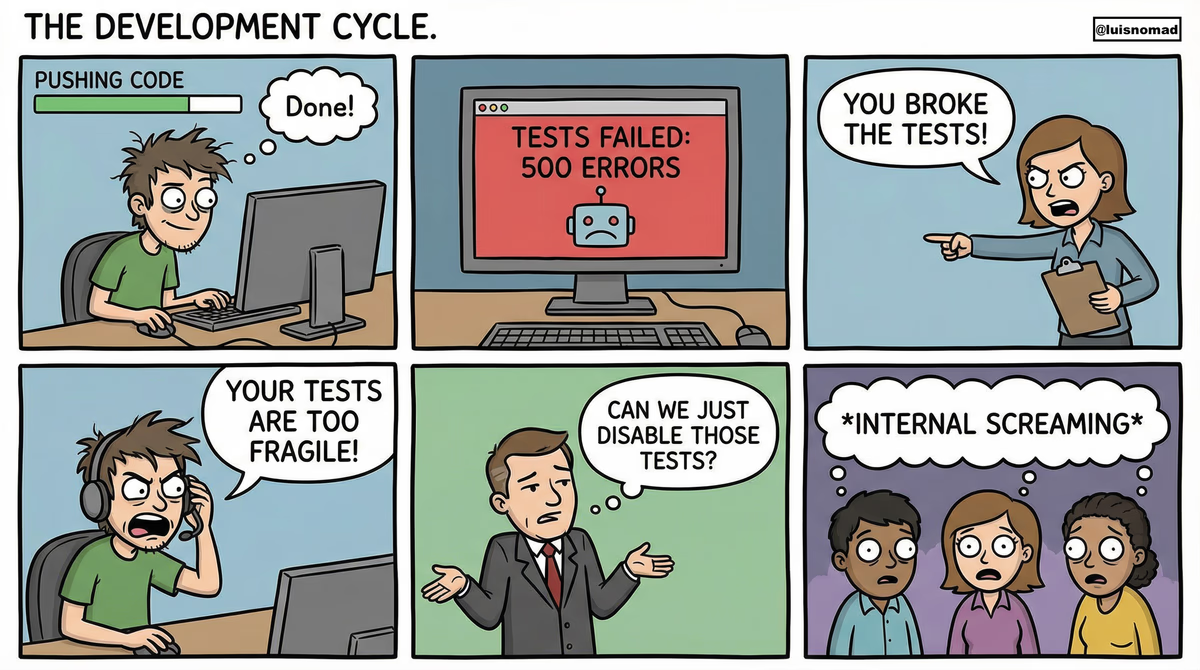

The Real Story: From Blame to Collaboration

Here’s what actually changes when you implement this system:

Before

Developer: *Pushes code*

Tests fail

QA: "You broke the tests!"

Developer: "Your tests are too fragile!"

Manager: "Can we just disable those tests?"

QA: *Internal screaming*After

Developer: *Pushes code*

Bot: "Hey! This component needs a data-cy attribute"

Developer: "Oh right, adding now"

Tests pass

QA: *Reviews PR* "Thanks for adding that!"

Developer: "The bot caught me. 😊"

Manager: "Why are test failures down 60%?"

Everyone: "We're working together better."The transformation isn’t just technical—it’s cultural.

When you replace blame with education, something magical happens:

- Developers become invested in test quality

- QA becomes proactive instead of reactive

- Everyone speaks the same language

- The system improves itself

Common Objections (And Honest Answers)

“We don’t have time for this.”

You’re already spending 2-3 hours per week investigating false positives. This saves time.

“Our tests are fine.”

Run npx eslint with the rules. If you have zero warnings, I’ll buy you coffee. If you have 50+, coffee’s on you. 😉

“AI-assisted refactoring sounds risky.”

You review the AI’s changes just like any PR. It’s a tool, not a replacement for judgment.

“Management won’t approve time for this.”

Show them the $180K/year calculation. Adjust numbers for your team size. Ask if they’d approve a 1,000% ROI investment.

“We’re migrating frameworks anyway.”

Perfect! This works for Cypress AND Playwright. Catch brittle patterns in the new framework before they become problems.

“What if ESLint rules slow down development?”

They’re warnings, not errors. Non-blocking. Developers can ignore them if there’s a good reason.

“How do we convince the team to adopt this?”

Start with the HTML dashboard. Show the pain. Then show the solution. Let them opt in.

Your Next Steps (Choose Your Own Adventure)

If you’re a developer:

- Clone the example ESLint plugin (link below)

- Run it on one test file

- Use the LLM report with Claude to fix it

- Show your team the before/after

- Watch their reaction

If you’re QA:

- Generate a count of hard-coded waits in your suite

- Calculate time wasted per week on investigations

- Present both numbers to your manager

- Propose a 4-week pilot

- Send them this article

If you’re a manager:

- Ask your team to run ESLint on tests

- Get a count of brittleness warnings

- Calculate (warnings × avg_fix_time × hourly_cost)

- Compare to setup cost ($14K)

- Make the math make sense to finance

If you’re an executive:

- Ask engineering to calculate test investigation time

- Multiply by hourly loaded cost

- Compare to feature development cost

- Ask why we’re not fixing this

- Approve the initiative

The Big Picture: Tests That Don’t Suck

Let’s zoom out for a second.

This isn’t really about ESLint rules or AI-assisted refactoring or clever reporting. Those are just tools.

This is about taking control of your test suite instead of letting it control you.

It’s about creating a culture where quality is built in, not bolted on.

It’s about empowering teams with knowledge instead of drowning them in failures.

And most importantly, it’s about proving that better tooling isn’t a cost—it’s an investment that pays for itself many times over.

The teams that figure this out ship faster, with more confidence, and have happier engineers.

The teams that don’t? They keep paying the $180K annual brittle test tax, forever, wondering why “our tests are always broken.”

Which team do you want to be?

Resources to Get Started

The code (open source, MIT license):

- ESLint plugin for Cypress brittle patterns

- ESLint plugin for Playwright brittle patterns

- Report generators (HTML, PR comment, LLM-optimized)

- GitHub Actions workflow templates

- DangerJS configuration examples

The methodology:

- 🎯 Big 5 brittle patterns cheat sheet

- 📊 ROI calculator spreadsheet

- 🤖 LLM prompt templates for test refactoring

- 📈 Weekly metrics tracking sheet

- 🎓 Team education slide deck

One Last Thing

If you implement this and it works (or doesn’t!), I’d genuinely love to hear about it.

The best ideas come from seeing what actually works in real teams with real constraints.

Maybe you’ll discover a sixth brittle pattern we missed.

Maybe you’ll find a better way to structure the LLM report.

Maybe you’ll adapt this for mobile testing or API testing.

Share your learnings. Make this better. Help other teams stop paying the brittle test tax.

Because at the end of the day, we’re all just trying to ship good software without wanting to throw our laptops out the window when the tests fail for no reason.

Let’s make that happen. 🚀

Enjoyed this? Share it with your team. They’ll thank you when they’re not debugging tests at 11 PM on a Friday.

Have questions? Drop me a line!

P.S. - That $180K calculation? I kept it conservative. For larger orgs with 50+ engineers, the real number is often $500K-1M annually. Run the math for your team. Then show your manager. Trust me.